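Most webmasters welcome web spiders from search engines such as Google and Bing, because indexing makes their content easy for users to find. They will certainly not welcome your spider extracting data from their site, and they will assume you are up to no good, for example when etsy.com product listings are used to drive traffic to a Hong Kong website. If a webmaster notices an unknown spider aggressively crawling the site, your IP may be blocked. In 2001, eBay took legal action against Bidder's Edge, an auction site that crawled it with web spiders, accusing it of "deep linking" to eBay's listings and bombarding its servers. Craigslist has a throttling mechanism to keep web crawlers from flooding the site with requests.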

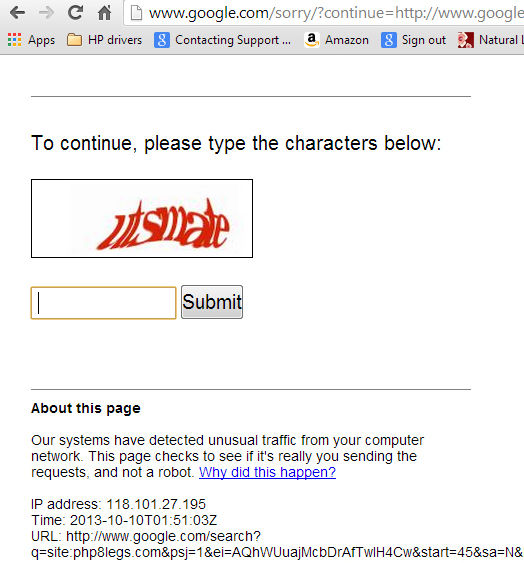

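Even big spiders like Google have mechanisms to stop others from extracting their content. If you don't believe it, search for a few keywords and, on the results page, click page 1, then page 2, page 3, and so on. At page 20 (in my case), Google stopped showing search results and asked me to confirm that I was human. If you cannot enter the correct CAPTCHA, your IP will eventually be blocked.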

Note: Check out the sample code at the bottom of this article.

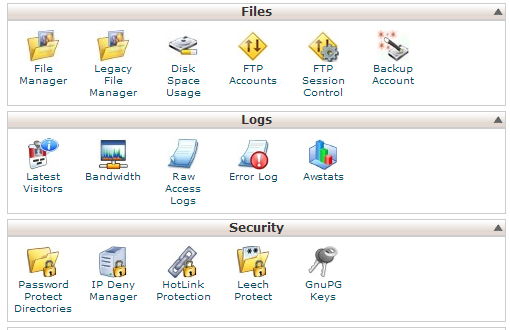

So how do we fly low under the radar? First, let's run the previous script and see how our web spider gets detected. Log in to cPanel and click "Latest Visitors".

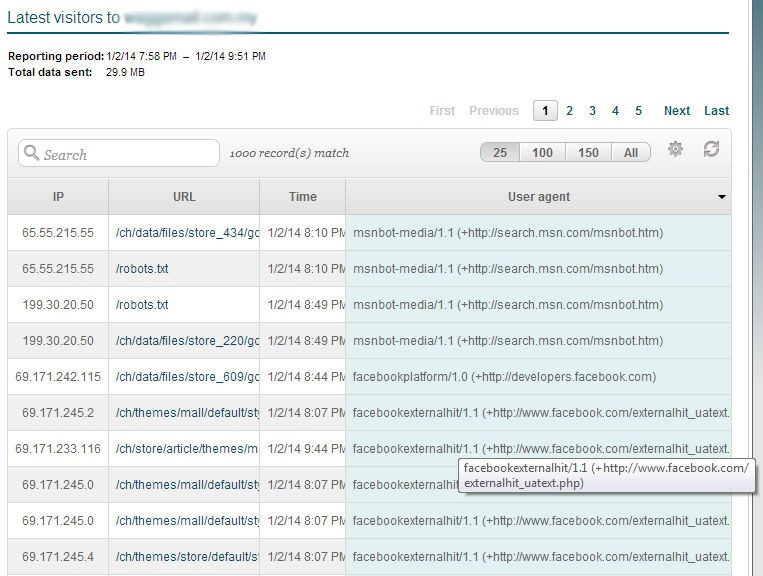

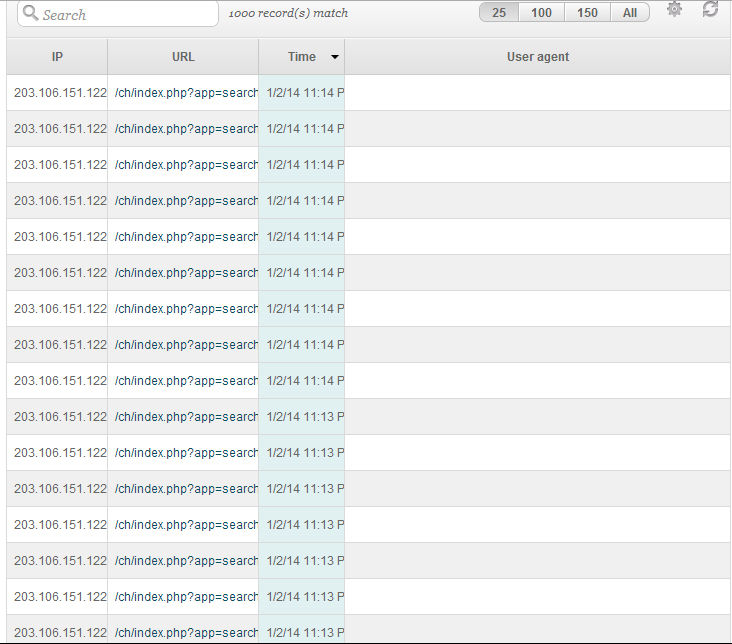

In the log views shown here, I display only the IP, URL, time, and user agent.

I ran the spider script against this website. Click the "Time" menu.

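When I ran the script, my dynamic IP was 203.106.151.122. Any webmaster can easily spot the pattern: the same IP, no user agent, and multiple pages requested within a minute. We can hide our web spider, though.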
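To make that detection pattern concrete, here is a rough sketch of the kind of check a webmaster could run over an access log. It is only an illustration: the function name `flagSuspiciousIps`, the entry format, and the threshold of 5 requests per minute are my own assumptions, not something cPanel provides.

```php
<?php
// Hypothetical log check; the entry format and threshold are assumptions.
// Each entry: array('ip' => string, 'time' => Unix timestamp, 'agent' => string).
function flagSuspiciousIps(array $entries, $maxPerMinute = 5) {
    // Group request times by IP and remember which IPs sent an empty user agent.
    $times = array();
    $noAgent = array();
    foreach ($entries as $e) {
        $times[$e['ip']][] = $e['time'];
        if ($e['agent'] === '') {
            $noAgent[$e['ip']] = true;
        }
    }

    $flagged = array();
    foreach ($times as $ip => $stamps) {
        sort($stamps);
        $start = 0;
        $n = count($stamps);
        for ($end = 0; $end < $n; $end++) {
            // Shrink the window until it spans less than 60 seconds.
            while ($stamps[$end] - $stamps[$start] >= 60) {
                $start++;
            }
            // More requests inside one minute than the threshold allows: flag the IP.
            if ($end - $start + 1 > $maxPerMinute) {
                $flagged[$ip] = isset($noAgent[$ip]) ? 'burst, no user agent' : 'burst';
                break;
            }
        }
    }
    return $flagged;
}
```

A burst of requests and a missing user agent are the two signals this sketch looks for, and they are the same two signals my own log entries gave away.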

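Here are some rules and the corresponding script changes:

1) Respect the website while scraping

Do not overload the target server or consume too much bandwidth. The webmaster can find your IP and user agent in the log files, and will see a run of consecutive requests hitting the server in a short time.

We can insert a random wait between requests, from a few seconds to a few minutes. Your script will of course take longer to finish, but the risk of detection is lower.

In our previous script, extract.php, add sleep(rand(15, 45));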

for($i=1; $i <= $lastPage; $i++) {

$targetPage = $target . $i;

$pages->get($targetPage, PARSE_CONTENT);

sleep(rand(15, 45)); // delay 15 to 45 seconds to prevent detection by system admin

}

The script pauses for at least 15 seconds and at most 45 seconds between requests.
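If you want waits that occasionally stretch to minutes rather than staying inside one fixed range, a small helper can pick the delay. This is a minimal sketch, not part of the original extract.php: the name `pickDelaySeconds`, the 10% chance of a long pause, and the 120 to 300 second long-pause range are all assumptions chosen for illustration.

```php
<?php
// Hypothetical helper: choose how many seconds to wait before the next request.
// Most of the time it returns a short delay; occasionally it returns a long pause.
function pickDelaySeconds($min = 15, $max = 45, $longPausePercent = 10, $longMin = 120, $longMax = 300) {
    // mt_rand(1, 100) is uniform, so this branch runs about $longPausePercent% of the time.
    if (mt_rand(1, 100) <= $longPausePercent) {
        return mt_rand($longMin, $longMax);
    }
    return mt_rand($min, $max);
}
```

In the loop, `sleep(rand(15, 45));` would then become `sleep(pickDelaySeconds());`. The total run time grows accordingly, which is the same trade-off described above.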

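2) Randomize the user agent for each request

Many ISPs use the Dynamic Host Configuration Protocol (DHCP), so the same IP address can end up being used by multiple users. That makes it hard for a webmaster to identify a web spider by its user agent: with varying user agents, the traffic looks like several users from one ISP browsing the same site.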

private function getRandomAgent() {

$agents = array('Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/31.0.1650.63 Safari/537.36',

'Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)',

'Mozilla/5.0 (Windows NT 6.1; WOW64; rv:21.0) Gecko/20100101 Firefox/21.0',

'Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; QQDownload 718; .NET CLR 2.0.50727; .NET CLR 3.0.4506.2152; .NET CLR 3.5.30729)',

'Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)',

'Mozilla/5.0 (X11; U; Linux x86_64; en-US; rv:1.9) Gecko Minefield/3.0',

'Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US; rv:1.9.2) Gecko/20100115 Firefox/3.6',

'Mozilla/5.0 (iPhone; CPU iPhone OS 5_1_1 like Mac OS X) AppleWebKit/534.46 (KHTML, like Gecko) Mobile/9B206',

'Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Trident/5.0; MATP; MATP)',

'Mozilla/5.0 (Windows NT 6.1; rv:22.0) Gecko/20100101 Firefox/22.0');

$agent = $agents[array_rand($agents)];

return $agent;

}

Add the function getRandomAgent() to the class HttpCurl in httpcurl.php.

protected function request($url) {

$ch = curl_init($url);

$agent = $this->getRandomAgent();

curl_setopt($ch, CURLOPT_USERAGENT, $agent);

curl_setopt($ch, CURLOPT_FOLLOWLOCATION, TRUE);

curl_setopt($ch, CURLOPT_MAXREDIRS, 5);

curl_setopt($ch, CURLOPT_RETURNTRANSFER, TRUE);

curl_setopt($ch, CURLOPT_URL, $url);

$this->_body = curl_exec($ch);

$this->_info = curl_getinfo($ch);

$this->_error = curl_error($ch);

curl_close($ch);

}

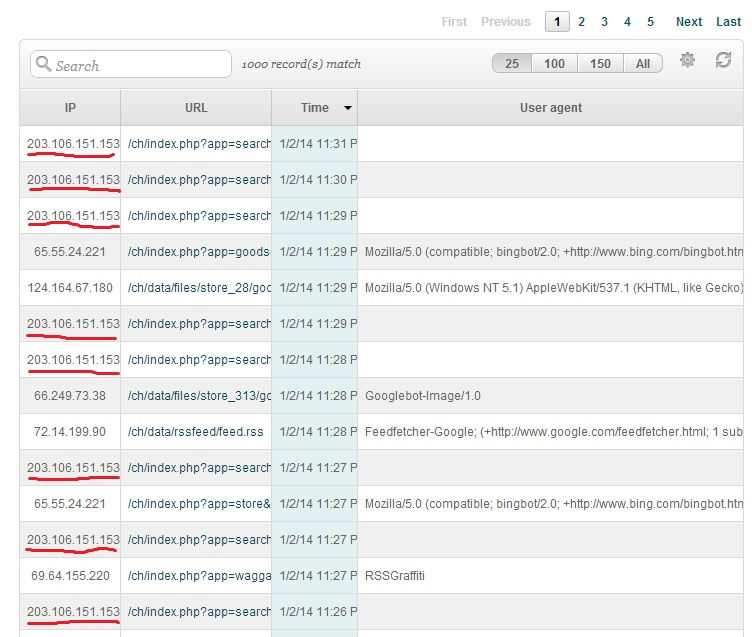

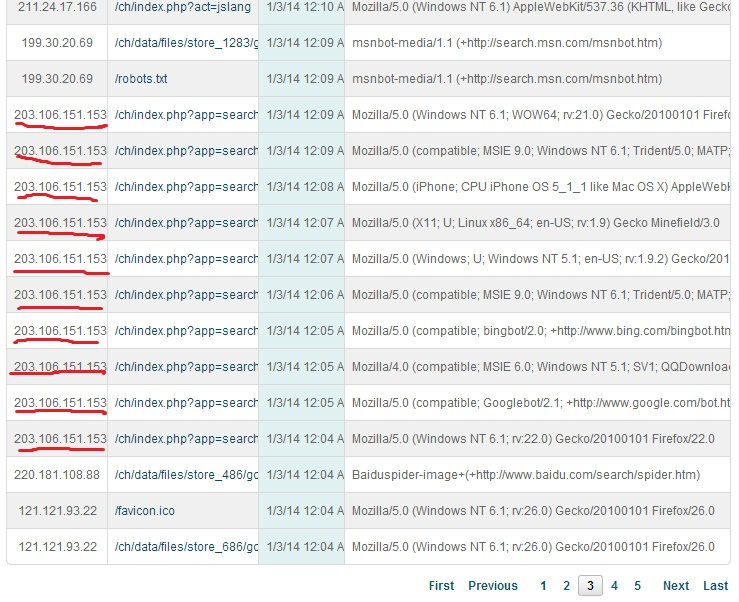

Add two lines to the function request(): $agent = $this->getRandomAgent() and curl_setopt($ch, CURLOPT_USERAGENT, $agent). Now look at the result.

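In PHP, we cannot spoof our IP address: we cannot send the target server a forged IP address and still receive the page source through PHP/cURL, because the response would be sent to the forged address. If you don't want your IP recorded on the target server, you can run the spider script through a proxy server. What I show here are free proxy servers, so you may run into problems with connection stability, speed, and so on. If you are serious about this business, use paid proxies. See chapter 27, on proxy servers, in Michael Schrenk's book Webbots, Spiders, and Screen Scrapers (2nd edition).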

With proxy servers, you can send your requests through different servers.

private function getRandomProxy() {

$proxies = array('202.187.160.140:3128',

'175.139.208.131:3128',

'60.51.218.180:8080');

$proxy = $proxies[array_rand($proxies)];

return $proxy;

}

Add the function getRandomProxy() to the class HttpCurl in httpcurl.php.

protected function request($url) {

$ch = curl_init($url);

$agent = $this->getRandomAgent();

curl_setopt($ch, CURLOPT_USERAGENT, $agent);

$proxy = $this->getRandomProxy();

curl_setopt($ch, CURLOPT_PROXY, $proxy);

curl_setopt($ch, CURLOPT_FOLLOWLOCATION, TRUE);

curl_setopt($ch, CURLOPT_MAXREDIRS, 5);

curl_setopt($ch, CURLOPT_RETURNTRANSFER, TRUE);

curl_setopt($ch, CURLOPT_URL, $url);

$this->_body = curl_exec($ch);

$this->_info = curl_getinfo($ch);

$this->_error = curl_error($ch);

curl_close($ch);

}

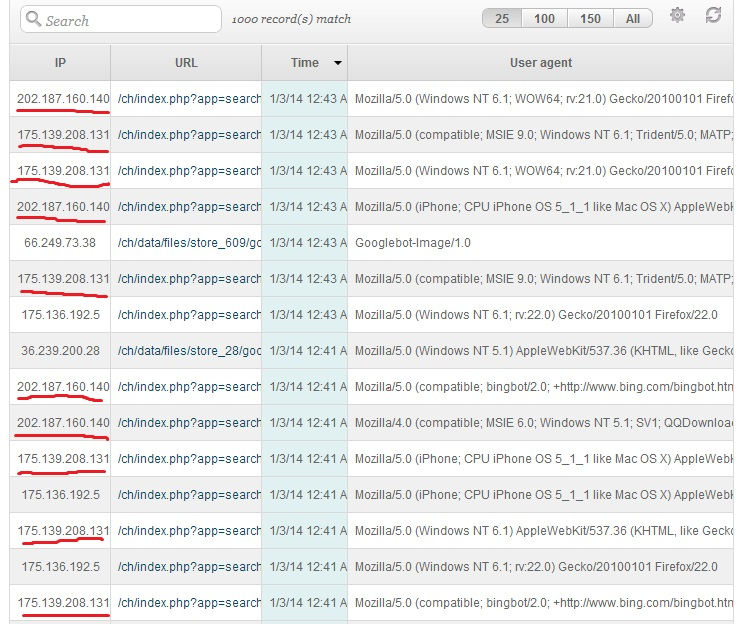

Now look at the result.

Now the system administrator can no longer find your IP address! The web spider's cloak of invisibility is complete!

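But where is the IP 60.51.218.180? It is missing because cURL could not use that free proxy server. cURL could not connect through that IP to fetch the page source, so curl_exec($ch) returned false. We therefore need to modify the script to retry with a different proxy.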
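One way to do that retry is sketched below. It is not the author's implementation: `fetchViaAnyProxy` and `curlFetch` are hypothetical names, the limit of 3 attempts and the 10 second connect timeout are assumptions, and the fetcher is passed in as a callable so the retry logic can be exercised without a network.

```php
<?php
// Hypothetical retry helper, not part of the original HttpCurl class.
// $fetch is any callable taking ($url, $proxy) and returning the page body,
// or false on failure (which is what curl_exec() returns when a proxy is unusable).
function fetchViaAnyProxy($url, array $proxies, callable $fetch, $maxAttempts = 3) {
    shuffle($proxies); // try the proxies in random order
    $attempts = 0;
    foreach ($proxies as $proxy) {
        if ($attempts++ >= $maxAttempts) {
            break; // stop after $maxAttempts proxies have been tried
        }
        $body = $fetch($url, $proxy);
        if ($body !== false) {
            return array('body' => $body, 'proxy' => $proxy);
        }
    }
    return false; // every attempted proxy failed
}

// A cURL-based fetcher using the same options as request() in this article.
function curlFetch($url, $proxy) {
    $ch = curl_init($url);
    curl_setopt($ch, CURLOPT_PROXY, $proxy);
    curl_setopt($ch, CURLOPT_FOLLOWLOCATION, true);
    curl_setopt($ch, CURLOPT_MAXREDIRS, 5);
    curl_setopt($ch, CURLOPT_RETURNTRANSFER, true);
    curl_setopt($ch, CURLOPT_CONNECTTIMEOUT, 10); // assumption: give up on a dead proxy after 10 s
    $body = curl_exec($ch);
    curl_close($ch);
    return $body;
}
```

A call such as `fetchViaAnyProxy($url, $proxies, 'curlFetch')` would return the body together with the proxy that worked, or false if none did. Note that a dead free proxy can still slow each attempt down by the full connect timeout.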

If you don't have a proxy server, you can buy a coffee at a café with free WiFi, sit in the CCTV blind spot, and quietly run your script!

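There are many other steps you can tweak, such as the order of requests. Instead of going from page 1, 2, 3 through to the last page, you can collect the URLs in advance and store them in MySQL. The script can then request the URLs in random order.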
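A minimal sketch of the random-order idea follows. For brevity it keeps the URL list in a PHP array instead of a MySQL table, and the function name `buildShuffledPageUrls` is hypothetical.

```php
<?php
// Hypothetical helper: build every page URL up front, then shuffle the list
// so the pages are not requested in the order 1, 2, 3, ...
// In-memory stand-in for the "collect URLs into MySQL first" approach.
function buildShuffledPageUrls($target, $lastPage) {
    $urls = array();
    for ($i = 1; $i <= $lastPage; $i++) {
        $urls[] = $target . $i;
    }
    shuffle($urls); // randomize the request order in place
    return $urls;
}
```

The loop in extract.php could then iterate over the returned list with `foreach` instead of counting from 1 to `$lastPage`. Every page is still fetched exactly once, only the sequence changes.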

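But don't assume that invisibility means nobody can track you. Well-established large websites have advanced tools to analyze their traffic and can find you wherever you hide!

Please use these tools constructively, and good luck!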

Code:

1. httpcurl.php

<?php

// Class HttpCurl

class HttpCurl {

protected $_cookie, $_parser, $_timeout;

private $_ch, $_info, $_body, $_error;

// Check curl activated

// Set Parser as well

public function __construct($p = null) {

if (!function_exists('curl_init')) {

throw new Exception('cURL not enabled!');

}

$this->setParser($p);

}

// Get web page and run parser

public function get($url, $status = FALSE) {

$this->request($url);

if ($status === TRUE) {

return $this->runParser($this->_body, $this->getStatus());

}

}

// Run cURL to get web page source file

protected function request($url) {

$ch = curl_init($url);

$agent = $this->getRandomAgent();

curl_setopt($ch, CURLOPT_USERAGENT, $agent);

$proxy = $this->getRandomProxy();

curl_setopt($ch, CURLOPT_PROXY, $proxy);

curl_setopt($ch, CURLOPT_FOLLOWLOCATION, TRUE);

curl_setopt($ch, CURLOPT_MAXREDIRS, 5);

curl_setopt($ch, CURLOPT_RETURNTRANSFER, TRUE);

curl_setopt($ch, CURLOPT_URL, $url);

$this->_body = curl_exec($ch);

$this->_info = curl_getinfo($ch);

$this->_error = curl_error($ch);

curl_close($ch);

}

// Get http_code

public function getStatus() {

return $this->_info['http_code']; // quote the key; a bare http_code is treated as an undefined constant

}

// Get web page header information

public function getHeader() {

return $this->_info;

}

// Get web page content

public function getBody() {

return $this->_body;

}

public function __destruct() {

}

// set parser, either object or callback function

public function setParser($p) {

if ($p === null || $p instanceof HttpScraper || is_callable($p))

$this->_parser = $p;

}

// Execute parser

public function runParser($content, $header) {

if ($this->_parser !== null)

{

if ($this->_parser instanceof HttpScraper)

$this->_parser->parse($content, $header);

else

call_user_func($this->_parser, $content, $header);

}

}

private function getRandomAgent() {

$agents = array('Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/31.0.1650.63 Safari/537.36',

'Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)',

'Mozilla/5.0 (Windows NT 6.1; WOW64; rv:21.0) Gecko/20100101 Firefox/21.0',

'Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; QQDownload 718; .NET CLR 2.0.50727; .NET CLR 3.0.4506.2152; .NET CLR 3.5.30729)',

'Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)',

'Mozilla/5.0 (X11; U; Linux x86_64; en-US; rv:1.9) Gecko Minefield/3.0',

'Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US; rv:1.9.2) Gecko/20100115 Firefox/3.6',

'Mozilla/5.0 (iPhone; CPU iPhone OS 5_1_1 like Mac OS X) AppleWebKit/534.46 (KHTML, like Gecko) Mobile/9B206',

'Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Trident/5.0; MATP; MATP)',

'Mozilla/5.0 (Windows NT 6.1; rv:22.0) Gecko/20100101 Firefox/22.0');

$agent = $agents[array_rand($agents)];

return $agent;

}

private function getRandomProxy() {

$proxies = array('202.187.160.140:3128',

'175.139.208.131:3128',

'60.51.218.180:8080');

$proxy = $proxies[array_rand($proxies)];

return $proxy;

}

}

?>

2. image.php

<?php

class Image {

private $_image;

private $_imageFormat;

public function load($imageFile) {

$imageInfo = getImageSize($imageFile);

$this->_imageFormat = $imageInfo[2];

if( $this->_imageFormat === IMAGETYPE_JPEG ) {

$this->_image = imagecreatefromjpeg($imageFile);

} elseif( $this->_imageFormat === IMAGETYPE_GIF ) {

$this->_image = imagecreatefromgif($imageFile);

} elseif( $this->_imageFormat === IMAGETYPE_PNG ) {

$this->_image = imagecreatefrompng($imageFile);

}

}

public function save($imageFile, $_imageFormat=IMAGETYPE_JPEG, $compression=75, $permissions=null) {

if( $_imageFormat == IMAGETYPE_JPEG ) {

imagejpeg($this->_image,$imageFile,$compression);

} elseif ( $_imageFormat == IMAGETYPE_GIF ) {

imagegif($this->_image,$imageFile);

} elseif ( $_imageFormat == IMAGETYPE_PNG ) {

imagepng($this->_image,$imageFile);

}

if( $permissions != null) {

chmod($imageFile,$permissions);

}

}

public function getWidth() {

return imagesx($this->_image);

}

public function getHeight() {

return imagesy($this->_image);

}

public function resizeToHeight($height) {

$ratio = $height / $this->getHeight();

$width = $this->getWidth() * $ratio;

$this->resize($width,$height);

}

public function resizeToWidth($width) {

$ratio = $width / $this->getWidth();

$height = $this->getHeight() * $ratio;

$this->resize($width,$height);

}

public function scale($scale) {

$width = $this->getWidth() * $scale/100;

$height = $this->getHeight() * $scale/100;

$this->resize($width,$height);

}

private function resize($width, $height) {

$newImage = imagecreatetruecolor($width, $height);

imagecopyresampled($newImage, $this->_image, 0, 0, 0, 0, $width, $height, $this->getWidth(), $this->getHeight());

$this->_image = $newImage;

}

}

?>

3. scraper.php

<?php

/********************************************************

* These are website specific matching pattern *

* Change these matching patterns for each websites *

* Else you will not get any results *

********************************************************/

define('TARGET_BLOCK','~<div class="negotiators-wrapper">(.*?)</div>(\r\n)</div>~s');

define('NAME', '~<div class="negotiators-name"><a href="/negotiator/(.*?)">(.*?)</a></div>~');

define('EMAIL', '~<div class="negotiators-email">(.*?)</div>~');

define('PHONE', '~<div class="negotiators-phone">(.*?)</div>~');

define('LASTPAGE', '~<li class="pager-last last"><a href="/negotiators\?page=(.*?)"~');

define('IMAGE', '~<div class="negotiators-photo"><a href="/negotiator/(.*?)"><img src="/(.*?)"~');

define('PARSE_CONTENT', TRUE);

define('IMAGE_DIR', 'c:\\xampp\\htdocs\\scraper\\image\\');

// Interface MySQLTable

interface MySQLTable {

public function addData($info);

}

// Class EmailDatabase

// Use the code below to create the table

/*****************************************************

CREATE TABLE IF NOT EXISTS `contact_info` (

`id` int(12) NOT NULL AUTO_INCREMENT,

`name` varchar(128) NOT NULL,

`email` varchar(128) NOT NULL,

`phone` varchar(128) NOT NULL,

`image` varchar(128) NOT NULL,

PRIMARY KEY (`id`),

UNIQUE KEY `email` (`email`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

*******************************************************/

class EmailDatabase extends mysqli implements MySQLTable {

private $_table = 'contact_info'; // set default table

// Connect to database

public function __construct() {

$host = 'localhost';

$user = 'root';

$pass = '';

$dbname = 'email_collection';

parent::__construct($host, $user, $pass, $dbname);

}

// Use this function to change to another table

public function setTableName($name) {

$this->_table = $name;

}

// Write data to table

public function addData($info) {

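// Scraped values are untrusted: escape each one before building the query

$values = array();

foreach (array('name', 'email', 'phone', 'image') as $key) {

$values[] = '\'' . $this->real_escape_string((string) $info[$key]) . '\'';

}

$sql = 'INSERT IGNORE INTO ' . $this->_table . ' (name, email, phone, image) ';

$sql .= 'VALUES (' . implode(', ', $values) . ')';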

return $this->query($sql);

}

// Execute MySQL query here

public function query($query, $mode = MYSQLI_STORE_RESULT) {

$this->ping();

$res = parent::query($query, $mode);

return $res;

}

}

// Interface HttpScraper

interface HttpScraper

{

public function parse($body, $head);

}

// Class Scraper

class Scraper implements HttpScraper {

private $_table;

// Store MySQL table if want to write to database.

public function __construct($t = null) {

$this->setTable($t);

}

// Delete table info in the destructor

public function __destruct() {

if ($this->_table !== null) {

$this->_table = null;

}

}

// Set table info to private variable $_table

public function setTable($t) {

if ($t === null || $t instanceof MySQLTable)

$this->_table = $t;

}

// Get table info

public function getTable() {

return $this->_table;

}

// Parse function

public function parse($body, $head) {

if ($head == 200) {

$p = preg_match_all(TARGET_BLOCK, $body, $blocks);

if ($p) {

foreach($blocks[0] as $block) {

$agent['name'] = $this->matchPattern(NAME, $block, 2);

$agent['email'] = $this->matchPattern(EMAIL, $block, 1);

$agent['phone'] = $this->matchPattern(PHONE, $block, 1);

$originalImagePath = $this->matchPattern(IMAGE, $block, 2);

$agent['image'] = $this->saveImage($originalImagePath, IMAGETYPE_GIF);

$this->_table->addData($agent);

}

}

}

}

// Return matched info

public function matchPattern($pattern, $content, $pos) {

if (preg_match($pattern, $content, $match)) {

return $match[$pos];

}

}

public function saveImage($imageUrl, $imageType = IMAGETYPE_GIF) { // default must be the constant, not the string 'IMAGETYPE_GIF'

if (!file_exists(IMAGE_DIR)) {

mkdir(IMAGE_DIR, 0777, true);

}

if( $imageType === IMAGETYPE_JPEG ) {

$fileExt = 'jpg';

} elseif ( $imageType === IMAGETYPE_GIF ) {

$fileExt = 'gif';

} elseif ( $imageType === IMAGETYPE_PNG ) {

$fileExt = 'png';

}

$newImageName = md5($imageUrl). '.' . $fileExt;

$image = new Image();

$image->load($imageUrl);

$image->resizeToWidth(100);

$image->save( IMAGE_DIR . $newImageName, $imageType );

return $newImageName;

}

}

?>

4. extract.php

<?php

include 'image.php';

include 'scraper.php';

include 'httpcurl.php'; // include lib file

$target = "http://<domain name>/negotiators?page="; // Set our target's URL; do not include the page number used for pagination

$startPage = $target . "1"; // Set first page

$scrapeContent = new Scraper;

$firstPage = new HttpCurl();

$firstPage->get($startPage); // get first page content

if ($firstPage->getStatus() === 200) {

$lastPage = $scrapeContent->matchPattern(LASTPAGE, $firstPage->getBody(), 1); // get total page info from first page

}

$db = new EmailDatabase(); // can be excluded if do not want to write to database

$scrapeContent = new Scraper($db); // can be excluded as well

$pages = new HttpCurl($scrapeContent);

// Looping from first page to last and parse each and every pages to database

for($i=1; $i <= $lastPage; $i++) {

$targetPage = $target . $i;

$pages->get($targetPage, PARSE_CONTENT);

sleep(rand(15, 45)); // delay 15 to 45 seconds to prevent detection by system admin

}

?>