这是PHP网络蜘蛛提取Email地址脚本最后一章, 我将展示如何把收集到的资料储存在MySQL数据库。您可以把从多个网站收集到的资料储存在同一个数据库。

您也可以把资料分类。比如说您从产业网站及购物网站收集的资料分开储存在不同的数据库表。

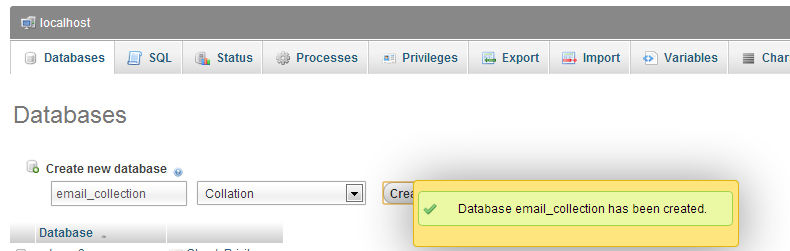

首先在您的电脑运行XAMPP,并确保激活Apache及MySQL。在浏览器输入URL "http://localhost/phpmyadmin/"。在顶部菜单栏选择"Database"。在这教程里我们的数据库为"email_collection"。 按"Create"钮可看到如下 图般。

您可在此下戴PHP cURL邮址提取脚本。

Note: Check out the sample code at bottom of this article.

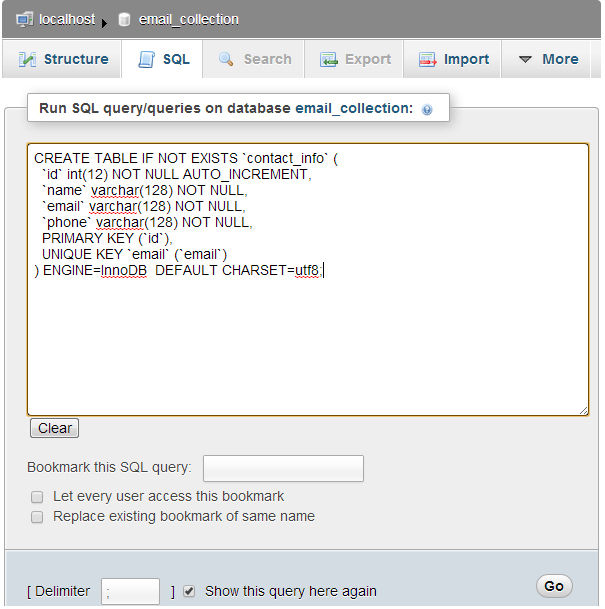

进入"email_collection"数据库,可看到空库。选择"SQL", 把以下查询置入空白地方,按"Go"。

CREATE TABLE IF NOT EXISTS `contact_info` ( `id` int(12) NOT NULL AUTO_INCREMENT, `name` varchar(128) NOT NULL, `email` varchar(128) NOT NULL, `phone` varchar(128) NOT NULL, PRIMARY KEY (`id`), UNIQUE KEY `email` (`email`) ) ENGINE=InnoDB DEFAULT CHARSET=utf8;

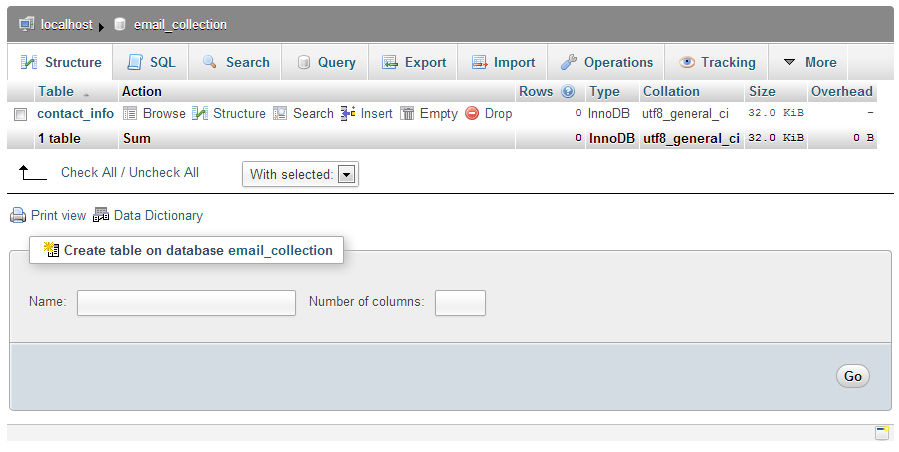

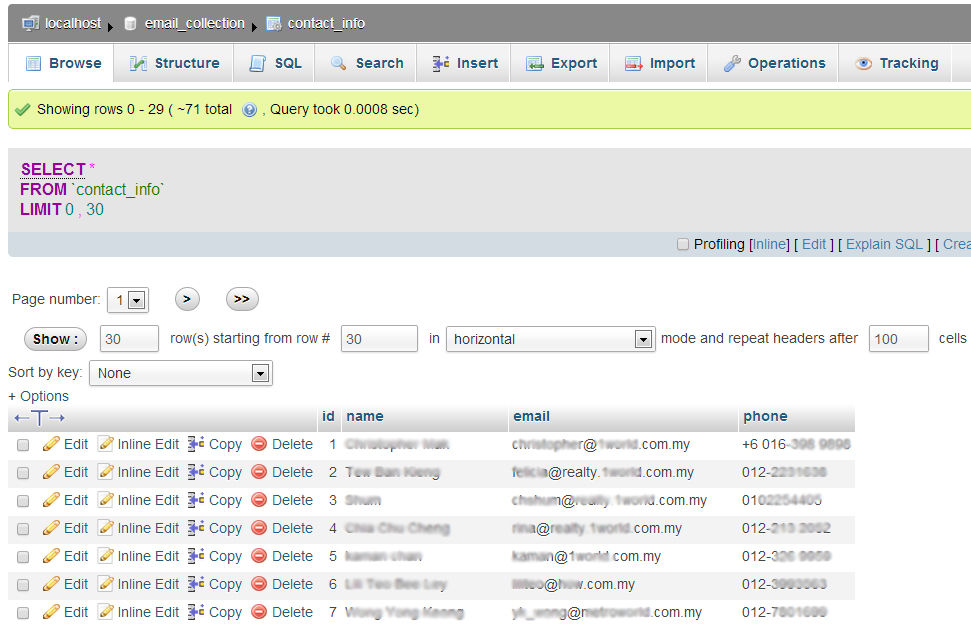

现您在"email_collection"数据库创建了"contact_info"数据库。

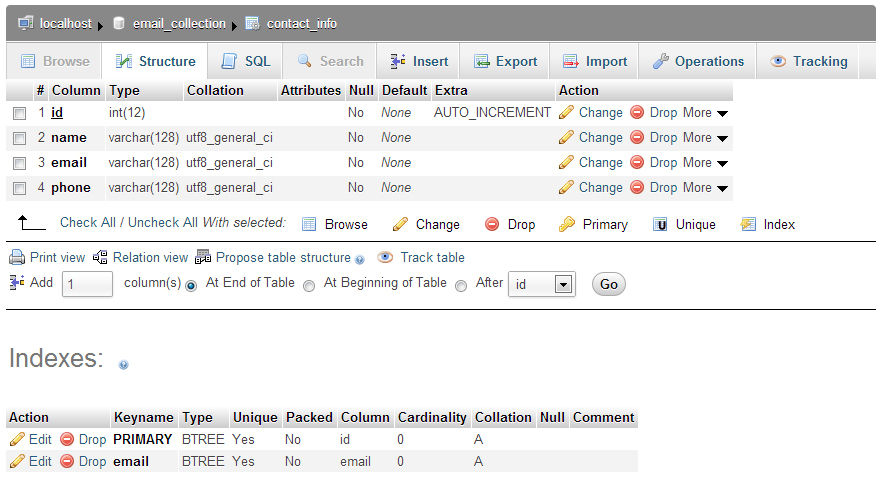

点击进入“contact_info”,内有4个数据列。在这里,每次一个新的条目( 相关的“name”,“email”和“phone”)添加到数据库表中列“id”会自动递增。“email”数据列被定义为“UNIQUE_KEY”,相同的电子邮件将不会存储在数据库中。例如,当你运行脚本于其他网站上,相同的电子邮件可能会在不同网站发现。在这种情况下,我们只是保持第一次被储存的资料,而忽略其他的。

这里我修改了httpcurl.php.

<?php

define('TARGET_BLOCK','~<div class="negotiators-wrapper">(.*?)</div>(\r\n)</div>~s');

define('NAME', '~<div class="negotiators-name"><a href="/negotiator/(.*?)">(.*?)</a></div>~');

define('EMAIL', '~<div class="negotiators-email">(.*?)</div>~');

define('PHONE', '~<div class="negotiators-phone">(.*?)</div>~');

define('LASTPAGE', '~<li class="pager-last last"><a href="/negotiators\?page=(.*?)"~');

define('PARSE_CONTENT', TRUE);

interface MySQLTable {

public function addData($info);

}

class EmailDatabase extends mysqli implements MySQLTable {

private $_table = 'contact_info';

public function __construct() {

$host = 'localhost';

$user = 'root';

$pass = '';

$dbname = 'email_collection';

parent::__construct($host, $user, $pass, $dbname);

}

public function setTableName($name) {

$this->_table = $name;

}

public function addData($info) {

$sql = 'INSERT IGNORE INTO ' . $this->_table . ' (name, email, phone) ';

$sql .= 'VALUES (\'' . $info[name] . '\', \'' . $info[email] . '\', \'' . $info[phone]. '\')';

return $this->query($sql);

}

public function query($query, $mode = MYSQLI_STORE_RESULT) {

$this->ping();

$res = parent::query($query, $mode);

return $res;

}

}

interface HttpScraper

{

public function parse($body, $head);

}

class Scraper implements HttpScraper

{

private $_table;

public function __construct($t = null) {

$this->setTable($t);

}

public function __destruct() {

if ($this->_table !== null) {

$this->_table = null;

}

}

public function setTable($t) {

if ($t === null || $t instanceof MySQLTable)

$this->_table = $t;

}

public function getTable() {

return $this->_table;

}

public function parse($body, $head) {

if ($head == 200) {

$p = preg_match_all(TARGET_BLOCK, $body, $blocks);

if ($p) {

foreach($blocks[0] as $block) {

$agent[name] = $this->matchPattern(NAME, $block, 2);

$agent[email] = $this->matchPattern(EMAIL, $block, 1);

$agent[phone] = $this->matchPattern(PHONE, $block, 1);

// echo "<pre>"; print_r($agent); echo "</pre>";

$this->_table->addData($agent);

}

}

}

}

public function matchPattern($pattern, $content, $pos) {

if (preg_match($pattern, $content, $match)) {

return $match[$pos];

}

}

}

class HttpCurl {

protected $_cookie, $_parser, $_timeout;

private $_ch, $_info, $_body, $_error;

public function __construct($p = null) {

if (!function_exists('curl_init')) {

throw new Exception('cURL not enabled!');

}

$this->setParser($p);

}

public function get($url, $status = FALSE) {

$this->request($url);

if ($status === TRUE) {

return $this->runParser($this->_body, $this->getStatus());

}

}

protected function request($url) {

$ch = curl_init($url);

curl_setopt($ch, CURLOPT_FOLLOWLOCATION, TRUE);

curl_setopt($ch, CURLOPT_MAXREDIRS, 5);

curl_setopt($ch, CURLOPT_RETURNTRANSFER, TRUE);

curl_setopt($ch, CURLOPT_URL, $url);

$this->_body = curl_exec($ch);

$this->_info = curl_getinfo($ch);

$this->_error = curl_error($ch);

curl_close($ch);

}

public function getStatus() {

return $this->_info[http_code];

}

public function getHeader() {

return $this->_info;

}

public function getBody() {

return $this->_body;

}

public function __destruct() {

}

public function setParser($p) {

if ($p === null || $p instanceof HttpScraper || is_callable($p))

$this->_parser = $p;

}

public function runParser($content, $header) {

if ($this->_parser !== null)

{

if ($this->_parser instanceof HttpScraper)

$this->_parser->parse($content, $header);

else

call_user_func($this->_parser, $content, $header);

}

}

}

?>

程序里增加了新的类"EmailDatabase"。这类有个函数addData(),并实现MySQLTable接口。

类"EmailDatabase"的构造函数通过用户名及密码连接MySQL。在XAMPP环境里,host是localhost, username 是 root 及password为空,不需要密码。

public function __construct() {

$host = 'localhost';

$user = 'root';

$pass = '';

$dbname = 'email_collection';

parent::__construct($host, $user, $pass, $dbname);

}

addData()将阵列$info的姓名,电子邮件和电话信息写入数据库表“contact_info”。

public function addData($info) {

$sql = 'INSERT IGNORE INTO ' . $this->_table . ' (name, email, phone) ';

$sql .= 'VALUES (\'' . $info[name] . '\', \'' . $info[email] . '\', \'' . $info[phone]. '\')';

return $this->query($sql);

}

public function query($query, $mode = MYSQLI_STORE_RESULT) {

$this->ping();

$res = parent::query($query, $mode);

return $res;

}

我也稍微修改了Scraper类。

private $_table;

public function __construct($t = null) {

$this->setTable($t);

}

public function __destruct() {

if ($this->_table !== null) {

$this->_table = null;

}

}

public function setTable($t) {

if ($t === null || $t instanceof MySQLTable)

$this->_table = $t;

}

Scraper类的构造函数初始化时设置数据库表。

函数 parse()也有变动,加入了函数addData() ,取得资料后写入数据库表。

$this->_table->addData($agent);

我们的 test.php:

<?php

include 'httpcurl.php';

$target = "http://<domain name>/negotiators?page=";

$startPage = $target . "1";

$scrapeContent = new Scraper;

$firstPage = new HttpCurl();

$firstPage->get($startPage);

if ($firstPage->getStatus() === 200) {

$lastPage = $scrapeContent->matchPattern(LASTPAGE, $firstPage->getBody(), 1);

}

$db = new EmailDatabase;

$scrapeContent = new Scraper($db);

$pages = new HttpCurl($scrapeContent);

for($i=1; $i <= $lastPage; $i++) {

$targetPage = $target . $i;

$pages->get($targetPage, PARSE_CONTENT);

}

?>

只有小小变动。用类EmailDatabase创建对象$db然后传 $scrapeContent.

$db = new EmailDatabase; $scrapeContent = new Scraper($db); $pages = new HttpCurl($scrapeContent);

运行程序时所有数据将被保存到数据库。

在这网站中我提取了超过1600++电邮资料。

如要运行在被的网站,通常只须修改这部份。

define('TARGET_BLOCK','~<div class="negotiators-wrapper">(.*?)</div>(\r\n)</div>~s');

define('NAME', '~<div class="negotiators-name"><a href="/negotiator/(.*?)">(.*?)</a></div>~');

define('EMAIL', '~<div class="negotiators-email">(.*?)</div>~');

define('PHONE', '~<div class="negotiators-phone">(.*?)</div>~');

define('LASTPAGE', '~<li class="pager-last last"><a href="/negotiators\?page=(.*?)"~');

define('PARSE_CONTENT', TRUE);

这就是我们的PHP email提取脚本。记得,不要用来发垃圾邮件!

至此,我们的脚本只提取文字资料,下一章我会用同样脚本提取图像。

Code:

1. httpcurl.php

<?php

/********************************************************

* These are website specific matching pattern *

* Change these matching patterns for each websites *

* Else you will not get any results *

********************************************************/

define('TARGET_BLOCK','~<div class="negotiators-wrapper">(.*?)</div>(\r\n)</div>~s');

define('NAME', '~<div class="negotiators-name"><a href="/negotiator/(.*?)">(.*?)</a></div>~');

define('EMAIL', '~<div class="negotiators-email">(.*?)</div>~');

define('PHONE', '~<div class="negotiators-phone">(.*?)</div>~');

define('LASTPAGE', '~<li class="pager-last last"><a href="/negotiators\?page=(.*?)"~');

define('PARSE_CONTENT', TRUE);

// Interface MySQLTable

interface MySQLTable {

public function addData($info);

}

// Class EmailDatabase

// Use the code below to crease table

/*****************************************************

CREATE TABLE IF NOT EXISTS `contact_info` (

`id` int(12) NOT NULL AUTO_INCREMENT,

`name` varchar(128) NOT NULL,

`email` varchar(128) NOT NULL,

`phone` varchar(128) NOT NULL,

PRIMARY KEY (`id`),

UNIQUE KEY `email` (`email`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

*******************************************************/

class EmailDatabase extends mysqli implements MySQLTable {

private $_table = 'contact_info'; // set default table

// Connect to database

public function __construct() {

$host = 'localhost';

$user = 'root';

$pass = '';

$dbname = 'email_collection';

parent::__construct($host, $user, $pass, $dbname);

}

// Use this function to change to another table

public function setTableName($name) {

$this->_table = $name;

}

// Write data to table

public function addData($info) {

$sql = 'INSERT IGNORE INTO ' . $this->_table . ' (name, email, phone) ';

$sql .= 'VALUES (\'' . $info[name] . '\', \'' . $info[email] . '\', \'' . $info[phone]. '\')';

return $this->query($sql);

}

// Execute MySQL query here

public function query($query, $mode = MYSQLI_STORE_RESULT) {

$this->ping();

$res = parent::query($query, $mode);

return $res;

}

}

// Interface HttpScraper

interface HttpScraper

{

public function parse($body, $head);

}

// Class Scraper

class Scraper implements HttpScraper {

private $_table;

// Store MySQL table if want to write to database.

public function __construct($t = null) {

$this->setTable($t);

}

// Delete table info at descructor

public function __destruct() {

if ($this->_table !== null) {

$this->_table = null;

}

}

// Set table info to private variable $_table

public function setTable($t) {

if ($t === null || $t instanceof MySQLTable)

$this->_table = $t;

}

// Get table info

public function getTable() {

return $this->_table;

}

// Parse function

public function parse($body, $head) {

if ($head == 200) {

$p = preg_match_all(TARGET_BLOCK, $body, $blocks);

if ($p) {

foreach($blocks[0] as $block) {

$agent[name] = $this->matchPattern(NAME, $block, 2);

$agent[email] = $this->matchPattern(EMAIL, $block, 1);

$agent[phone] = $this->matchPattern(PHONE, $block, 1);

// echo "<pre>"; print_r($agent); echo "</pre>";

$this->_table->addData($agent);

}

}

}

}

// Return matched info

public function matchPattern($pattern, $content, $pos) {

if (preg_match($pattern, $content, $match)) {

return $match[$pos];

}

}

}

// Class HttpCurl

class HttpCurl {

protected $_cookie, $_parser, $_timeout;

private $_ch, $_info, $_body, $_error;

// Check curl activated

// Set Parser as well

public function __construct($p = null) {

if (!function_exists('curl_init')) {

throw new Exception('cURL not enabled!');

}

$this->setParser($p);

}

// Get web page and run parser

public function get($url, $status = FALSE) {

$this->request($url);

if ($status === TRUE) {

return $this->runParser($this->_body, $this->getStatus());

}

}

// Run cURL to get web page source file

protected function request($url) {

$ch = curl_init($url);

curl_setopt($ch, CURLOPT_FOLLOWLOCATION, TRUE);

curl_setopt($ch, CURLOPT_MAXREDIRS, 5);

curl_setopt($ch, CURLOPT_RETURNTRANSFER, TRUE);

curl_setopt($ch, CURLOPT_URL, $url);

$this->_body = curl_exec($ch);

$this->_info = curl_getinfo($ch);

$this->_error = curl_error($ch);

curl_close($ch);

}

// Get http_code

public function getStatus() {

return $this->_info[http_code];

}

// Get web page header information

public function getHeader() {

return $this->_info;

}

// Get web page content

public function getBody() {

return $this->_body;

}

public function __destruct() {

}

// set parser, either object or callback function

public function setParser($p) {

if ($p === null || $p instanceof HttpScraper || is_callable($p))

$this->_parser = $p;

}

// Execute parser

public function runParser($content, $header) {

if ($this->_parser !== null)

{

if ($this->_parser instanceof HttpScraper)

$this->_parser->parse($content, $header);

else

call_user_func($this->_parser, $content, $header);

}

}

}

?>

2. test.php

<?php

include 'httpcurl.php'; // include lib file

$target = "http://<website domain>/negotiators?page="; // Set our target's url, remember not to include nu,ber in pagination

$startPage = $target . "1"; // Set first page

$scrapeContent = new Scraper;

$firstPage = new HttpCurl();

$firstPage->get($startPage); // get first page content

if ($firstPage->getStatus() === 200) {

$lastPage = $scrapeContent->matchPattern(LASTPAGE, $firstPage->getBody(), 1); // get total page info from first page

}

$db = new EmailDatabase; // can be excluded if do not want to write to database

$scrapeContent = new Scraper($db); // // can be excluded as well

$pages = new HttpCurl($scrapeContent);

// Looping from first page to last and parse each and every pages to database

for($i=1; $i <= $lastPage; $i++) {

$targetPage = $target . $i;

$pages->get($targetPage, PARSE_CONTENT);

}

?>