Most webmasters welcome webbots from search engines such as Google and Bing. Their content gets indexed, and users can find their websites easily. They are far less welcoming when your web spider extracts data from their sites: for all they know you are up to no good, as when products from etsy.com were used to drive traffic to a Hong Kong website. If webmasters detect an unknown web spider aggressively crawling their site, your IP will most likely be blocked. Around 2000, eBay took legal action against Bidder's Edge, an auction scraping site, for “deep linking” into its listings and bombarding its service. Craigslist has a throttling mechanism to prevent web crawlers from overwhelming the site with requests.

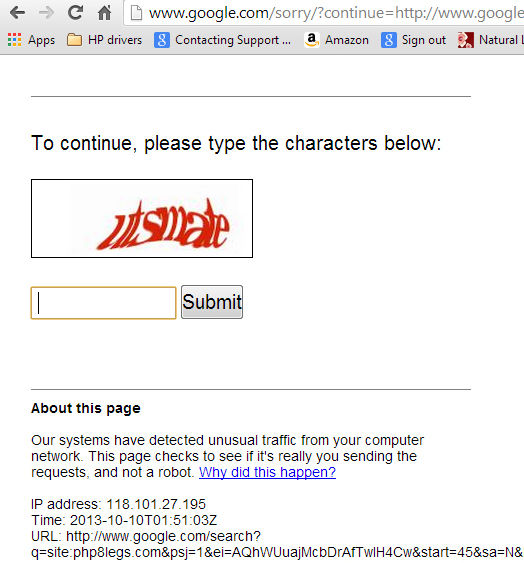

Even big spiders like Google have mechanisms to prevent others from scraping their content. Try searching for a keyword and, on the results page, click page 1, then page 2, page 3, and so on. At page 20 (in my case), Google stops displaying search results and wants to find out whether a human or a webbot is reading the page. If you are unable to enter the correct captcha, your IP will eventually be blocked.

Note: Check out the sample code at the bottom of this article.

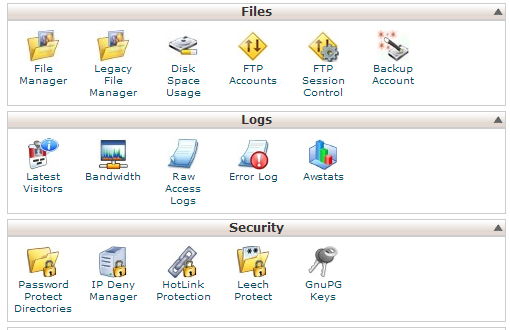

So how can we fly under the radar when scraping websites? First, let's see how our web spider can be detected by running the earlier script against our own website. Log in to cPanel and click on "Latest Visitors".

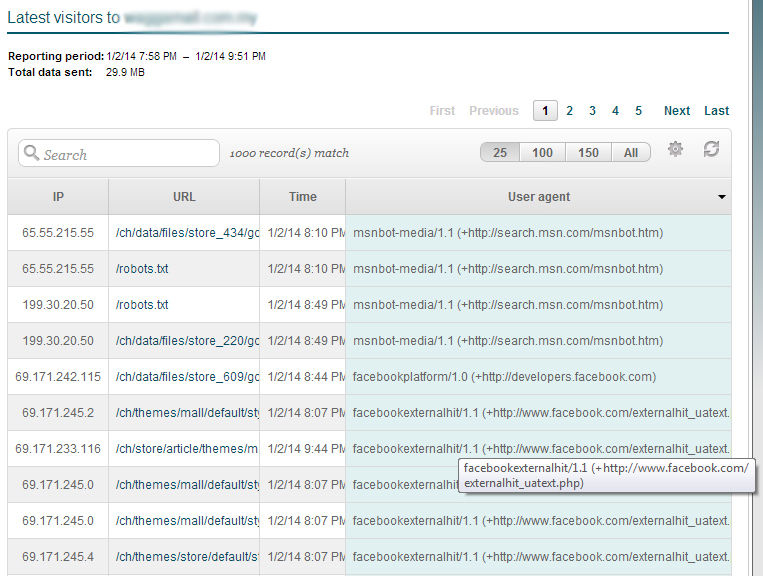

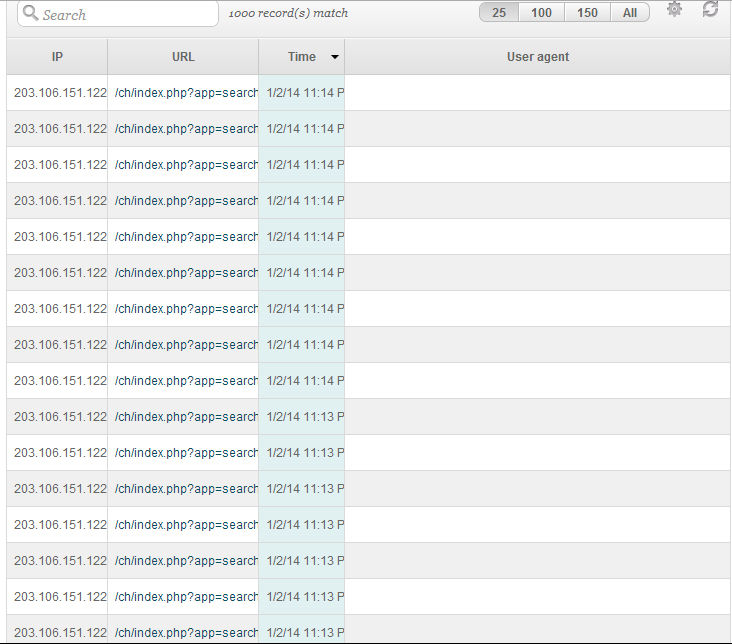

You will see a table with the information below. For this tutorial, I only display IP, URL, Time and User Agent.

I executed the web spider script to crawl this website. Click on the "Time" menu and here is the output.

My dynamic IP was 203.106.151.122 when running the script. Any webmaster can easily spot the same IP, with no user agent, requesting many pages within a minute. We can do a few things to hide our web spider.
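To make the detection side concrete, here is a rough sketch of the kind of check a webmaster could run over a visitor log. The entry layout (`ip`, `time`, `agent`) and the function name `findBusyIps()` are assumptions for illustration; cPanel does not expose its log in this exact form.

```php
<?php
// Hypothetical log rows: each entry has 'ip', 'time' (Unix timestamp) and 'agent'.
// Returns the IPs that made more than $limit requests within a single clock minute.
function findBusyIps(array $entries, int $limit): array {
    $counts = array();
    foreach ($entries as $entry) {
        // Bucket each request by IP and by minute
        $bucket = $entry['ip'] . '|' . intdiv($entry['time'], 60);
        $counts[$bucket] = ($counts[$bucket] ?? 0) + 1;
    }

    $busy = array();
    foreach ($counts as $bucket => $count) {
        if ($count > $limit) {
            list($ip) = explode('|', $bucket);
            $busy[$ip] = true; // use keys to avoid listing an IP twice
        }
    }
    return array_keys($busy);
}
```

A spider that sends one request after another with no pause will exceed almost any sensible limit here, which is the pattern the rules below try to avoid.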

Here are some rules and changes to our previous script:

1) Respect the website you are scraping

Do not overload the target server or consume too much bandwidth. The web admin can see your IP and user agent in the log file, and will notice continuous requests to the server at very short intervals.

We can insert a random wait between requests, from a few seconds to minutes. Your script will take much longer to complete, but the risk of detection is lower.

In our previous script, extract.php

for($i=1; $i <= $lastPage; $i++) {

$targetPage = $target . $i;

$pages->get($targetPage, PARSE_CONTENT);

sleep(rand(15, 45)); // delay 15 to 45 seconds to prevent detection by system admin

}

In this example, sleep(rand(15, 45)); is added to the loop, so the script pauses for a random 15 to 45 seconds before sending the next request.
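The same idea can be wrapped in a small helper. This is only a sketch: `pickDelay()` and `politePause()` are hypothetical names, not part of the original script, and the sub-second jitter is my own addition so that the gaps in the log are not whole seconds.

```php
<?php
// Pick a random pause in seconds, in the range [$minSeconds, $maxSeconds).
function pickDelay(int $minSeconds, int $maxSeconds): float {
    if ($maxSeconds <= $minSeconds) {
        throw new InvalidArgumentException('maxSeconds must be greater than minSeconds');
    }
    $whole = random_int($minSeconds, $maxSeconds - 1);
    $fraction = random_int(0, 999) / 1000; // sub-second jitter
    return $whole + $fraction;
}

// Sleep for a random delay and return it, so the caller can log how long it waited.
function politePause(int $minSeconds = 15, int $maxSeconds = 45): float {
    $delay = pickDelay($minSeconds, $maxSeconds);
    $seconds = (int) floor($delay);
    sleep($seconds);                                    // whole seconds
    usleep((int) round(($delay - $seconds) * 1000000)); // remaining fraction
    return $delay;
}
```

In extract.php, a call to politePause() could stand in for sleep(rand(15, 45)) inside the loop.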

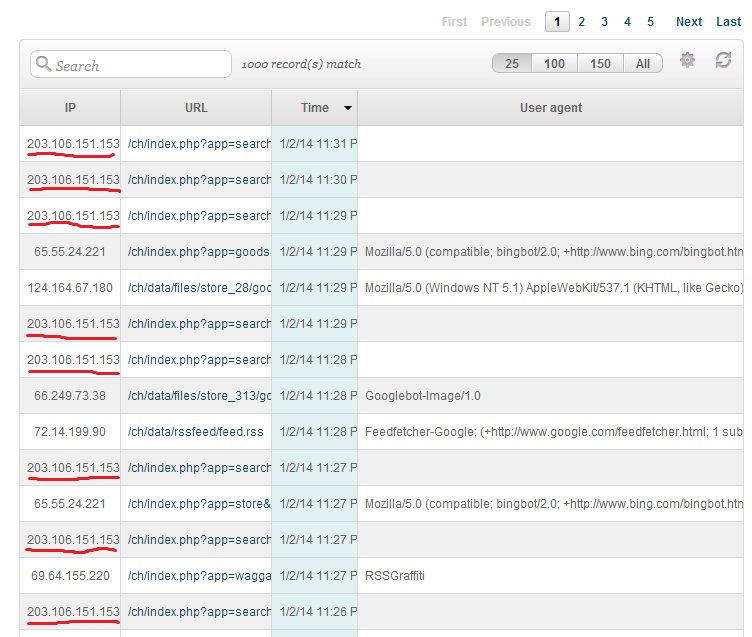

As you can see, my dynamic IP was 203.106.151.122, and there are now other requests (from humans or webbots) between my two requests. Now we move on to the user agent.

2) Randomize user agent for every request

Many ISPs hand out addresses with the Dynamic Host Configuration Protocol (DHCP) or place many subscribers behind one shared address, so the same IP can belong to several users. We can make detection harder for the website admin by randomizing the user agent for every request to the server. This makes it look like multiple users are browsing the same website from one ISP.

private function getRandomAgent() {

$agents = array('Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/31.0.1650.63 Safari/537.36',

'Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)',

'Mozilla/5.0 (Windows NT 6.1; WOW64; rv:21.0) Gecko/20100101 Firefox/21.0',

'Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; QQDownload 718; .NET CLR 2.0.50727; .NET CLR 3.0.4506.2152; .NET CLR 3.5.30729)',

'Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)',

'Mozilla/5.0 (X11; U; Linux x86_64; en-US; rv:1.9) Gecko Minefield/3.0',

'Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US; rv:1.9.2) Gecko/20100115 Firefox/3.6',

'Mozilla/5.0 (iPhone; CPU iPhone OS 5_1_1 like Mac OS X) AppleWebKit/534.46 (KHTML, like Gecko) Mobile/9B206',

'Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Trident/5.0; MATP; MATP)',

'Mozilla/5.0 (Windows NT 6.1; rv:22.0) Gecko/20100101 Firefox/22.0');

$agent = $agents[array_rand($agents)];

return $agent;

}

Add the function getRandomAgent() to class HttpCurl in httpcurl.php.

protected function request($url) {

$ch = curl_init($url);

$agent = $this->getRandomAgent();

curl_setopt($ch, CURLOPT_USERAGENT, $agent);

curl_setopt($ch, CURLOPT_FOLLOWLOCATION, TRUE);

curl_setopt($ch, CURLOPT_MAXREDIRS, 5);

curl_setopt($ch, CURLOPT_RETURNTRANSFER, TRUE);

curl_setopt($ch, CURLOPT_URL, $url);

$this->_body = curl_exec($ch);

$this->_info = curl_getinfo($ch);

$this->_error = curl_error($ch);

curl_close($ch);

}

Two lines, $agent = $this->getRandomAgent() and curl_setopt($ch, CURLOPT_USERAGENT, $agent), are added to the function request().

Now the output looks like this.

Our spider now looks like any other request in the log file, except for the IP address.

3) Use a proxy to hide your IP

We cannot spoof an IP address at the PHP programming level. That is, we cannot send a fake IP address to the target server and still receive the response with PHP/cURL. If you don't want your IP to be logged at the target server, you can run the web scraping script through a proxy server. Here is a list of free proxies you can use. However, you might run into connection stability, speed and other issues with free proxies. That is no problem for one-off scraping or small projects, but consider a paid proxy if you are serious about this business. Michael Schrenk has a good write-up on proxies (Chapter 27) in his book, Webbots, Spiders, and Screen Scrapers (2nd Edition).

If you have a list of proxy servers, you can route each request through a different, randomly chosen server.

private function getRandomProxy() {

$proxies = array('202.187.160.140:3128',

'175.139.208.131:3128',

'60.51.218.180:8080');

$proxy = $proxies[array_rand($proxies)];

return $proxy;

}

Add a new function getRandomProxy() to class HttpCurl in httpcurl.php.

protected function request($url) {

$ch = curl_init($url);

$agent = $this->getRandomAgent();

curl_setopt($ch, CURLOPT_USERAGENT, $agent);

$proxy = $this->getRandomProxy();

curl_setopt($ch, CURLOPT_PROXY, $proxy);

curl_setopt($ch, CURLOPT_FOLLOWLOCATION, TRUE);

curl_setopt($ch, CURLOPT_MAXREDIRS, 5);

curl_setopt($ch, CURLOPT_RETURNTRANSFER, TRUE);

curl_setopt($ch, CURLOPT_URL, $url);

$this->_body = curl_exec($ch);

$this->_info = curl_getinfo($ch);

$this->_error = curl_error($ch);

curl_close($ch);

}

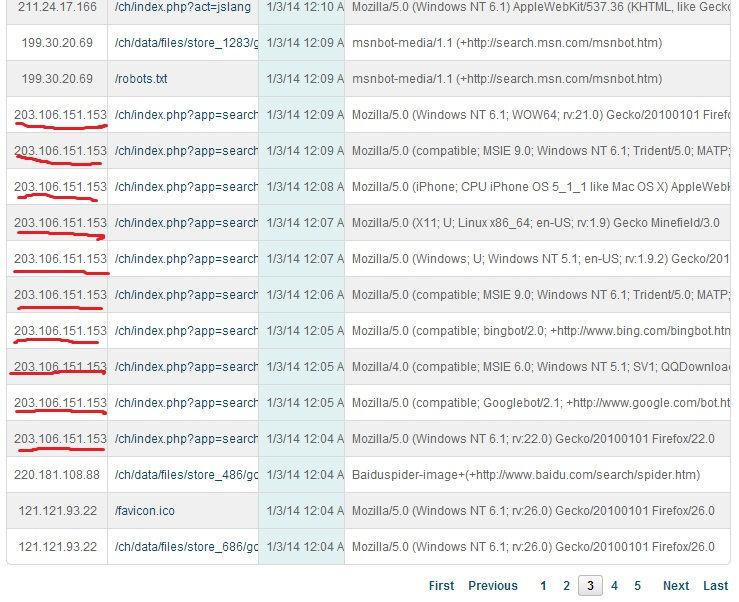

Now we call getRandomProxy() in request() and configure cURL with curl_setopt($ch, CURLOPT_PROXY, $proxy). Here is the output:

Now the system admin can no longer find your IP address!!

But where is IP 60.51.218.180 in the log file? It is missing because cURL was not able to connect to that proxy. In that case we cannot get the page source: curl_exec($ch) returns FALSE (CURLOPT_RETURNTRANSFER is set), and curl_error($ch) holds the reason. So our script needs to handle this situation and retry with a different proxy.
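One way to handle the failed proxy is a small retry loop. The sketch below is standalone rather than a patch to HttpCurl: `fetchViaProxy()` and `fetchWithRetry()` are hypothetical names, the 10 second connect timeout is an assumed value, and the optional `$fetch` argument exists only so the retry logic can be tried without a network.

```php
<?php
// Fetch $url through one proxy; returns the body, or false if the request failed.
function fetchViaProxy(string $url, string $proxy) {
    $ch = curl_init($url);
    curl_setopt($ch, CURLOPT_PROXY, $proxy);
    curl_setopt($ch, CURLOPT_RETURNTRANSFER, true);
    curl_setopt($ch, CURLOPT_CONNECTTIMEOUT, 10); // assumed timeout, tune as needed
    $body = curl_exec($ch);
    return $body; // the handle is released when $ch goes out of scope
}

// Try proxies in random order until one returns a page,
// giving up after $maxAttempts proxies have failed.
function fetchWithRetry(string $url, array $proxies, int $maxAttempts = 3, ?callable $fetch = null) {
    $fetch = $fetch ?? 'fetchViaProxy';
    shuffle($proxies);
    foreach (array_slice($proxies, 0, $maxAttempts) as $proxy) {
        $body = $fetch($url, $proxy);
        if ($body !== false) {
            return $body; // first proxy that answers wins
        }
    }
    return false; // every attempt failed
}
```

Inside HttpCurl the same pattern would mean checking $this->_body === false after curl_exec() and calling request() again with another proxy.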

If you don't have a proxy server, a non-technical method is to buy a coffee at a cafe with free wifi, sit in a CCTV blind spot and run your script quietly!

There are many other details you can fine-tune. For example, we are sending requests sequentially, from page 1, 2, 3 to the last page. We could pre-generate or collect the target URLs and store them in MySQL; the script can then fetch URLs in random order.
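As a minimal sketch of the random-order idea, the function below builds the page URLs up front and shuffles them. It keeps the list in memory instead of MySQL, and `buildShuffledUrls()` is a hypothetical helper, not part of the scripts in this article.

```php
<?php
// Build every page URL first, then shuffle so pages are not requested in sequence.
function buildShuffledUrls(string $target, int $lastPage): array {
    $urls = array();
    for ($i = 1; $i <= $lastPage; $i++) {
        $urls[] = $target . $i;
    }
    shuffle($urls); // random order, each page still appears exactly once
    return $urls;
}
```

The loop in extract.php could then iterate over this array with foreach instead of counting from 1 to $lastPage.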

However, please do not think that no one can trace you with the above techniques. Well-established websites have advanced tools to analyze their traffic. Ninja or no ninja, you can still be found.

Use the tools constructively and good luck!

Code:

1. httpcurl.php

<?php

// Class HttpCurl

class HttpCurl {

protected $_cookie, $_parser, $_timeout;

private $_ch, $_info, $_body, $_error;

// Check curl activated

// Set Parser as well

public function __construct($p = null) {

if (!function_exists('curl_init')) {

throw new Exception('cURL not enabled!');

}

$this->setParser($p);

}

// Get web page and run parser

public function get($url, $status = FALSE) {

$this->request($url);

if ($status === TRUE) {

return $this->runParser($this->_body, $this->getStatus());

}

}

// Run cURL to get web page source file

protected function request($url) {

$ch = curl_init($url);

$agent = $this->getRandomAgent();

curl_setopt($ch, CURLOPT_USERAGENT, $agent);

$proxy = $this->getRandomProxy();

curl_setopt($ch, CURLOPT_PROXY, $proxy);

curl_setopt($ch, CURLOPT_FOLLOWLOCATION, TRUE);

curl_setopt($ch, CURLOPT_MAXREDIRS, 5);

curl_setopt($ch, CURLOPT_RETURNTRANSFER, TRUE);

curl_setopt($ch, CURLOPT_URL, $url);

$this->_body = curl_exec($ch);

$this->_info = curl_getinfo($ch);

$this->_error = curl_error($ch);

curl_close($ch);

}

// Get http_code

public function getStatus() {

return $this->_info['http_code'];

}

// Get web page header information

public function getHeader() {

return $this->_info;

}

// Get web page content

public function getBody() {

return $this->_body;

}

public function __destruct() {

}

// set parser, either object or callback function

public function setParser($p) {

if ($p === null || $p instanceof HttpScraper || is_callable($p))

$this->_parser = $p;

}

// Execute parser

public function runParser($content, $header) {

if ($this->_parser !== null)

{

if ($this->_parser instanceof HttpScraper)

$this->_parser->parse($content, $header);

else

call_user_func($this->_parser, $content, $header);

}

}

private function getRandomAgent() {

$agents = array('Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/31.0.1650.63 Safari/537.36',

'Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)',

'Mozilla/5.0 (Windows NT 6.1; WOW64; rv:21.0) Gecko/20100101 Firefox/21.0',

'Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; QQDownload 718; .NET CLR 2.0.50727; .NET CLR 3.0.4506.2152; .NET CLR 3.5.30729)',

'Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)',

'Mozilla/5.0 (X11; U; Linux x86_64; en-US; rv:1.9) Gecko Minefield/3.0',

'Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US; rv:1.9.2) Gecko/20100115 Firefox/3.6',

'Mozilla/5.0 (iPhone; CPU iPhone OS 5_1_1 like Mac OS X) AppleWebKit/534.46 (KHTML, like Gecko) Mobile/9B206',

'Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Trident/5.0; MATP; MATP)',

'Mozilla/5.0 (Windows NT 6.1; rv:22.0) Gecko/20100101 Firefox/22.0');

$agent = $agents[array_rand($agents)];

return $agent;

}

private function getRandomProxy() {

$proxies = array('202.187.160.140:3128',

'175.139.208.131:3128',

'60.51.218.180:8080');

$proxy = $proxies[array_rand($proxies)];

return $proxy;

}

}

?>

2. image.php

<?php

class Image {

private $_image;

private $_imageFormat;

public function load($imageFile) {

$imageInfo = getImageSize($imageFile);

$this->_imageFormat = $imageInfo[2];

if( $this->_imageFormat === IMAGETYPE_JPEG ) {

$this->_image = imagecreatefromjpeg($imageFile);

} elseif( $this->_imageFormat === IMAGETYPE_GIF ) {

$this->_image = imagecreatefromgif($imageFile);

} elseif( $this->_imageFormat === IMAGETYPE_PNG ) {

$this->_image = imagecreatefrompng($imageFile);

}

}

public function save($imageFile, $_imageFormat=IMAGETYPE_JPEG, $compression=75, $permissions=null) {

if( $_imageFormat == IMAGETYPE_JPEG ) {

imagejpeg($this->_image,$imageFile,$compression);

} elseif ( $_imageFormat == IMAGETYPE_GIF ) {

imagegif($this->_image,$imageFile);

} elseif ( $_imageFormat == IMAGETYPE_PNG ) {

imagepng($this->_image,$imageFile);

}

if( $permissions != null) {

chmod($imageFile,$permissions);

}

}

public function getWidth() {

return imagesx($this->_image);

}

public function getHeight() {

return imagesy($this->_image);

}

public function resizeToHeight($height) {

$ratio = $height / $this->getHeight();

$width = $this->getWidth() * $ratio;

$this->resize($width,$height);

}

public function resizeToWidth($width) {

$ratio = $width / $this->getWidth();

$height = $this->getHeight() * $ratio;

$this->resize($width,$height);

}

public function scale($scale) {

$width = $this->getWidth() * $scale/100;

$height = $this->getHeight() * $scale/100;

$this->resize($width,$height);

}

private function resize($width, $height) {

$newImage = imagecreatetruecolor($width, $height);

imagecopyresampled($newImage, $this->_image, 0, 0, 0, 0, $width, $height, $this->getWidth(), $this->getHeight());

$this->_image = $newImage;

}

}

?>

3. scraper.php

<?php

/********************************************************

* These are website specific matching pattern *

* Change these matching patterns for each websites *

* Else you will not get any results *

********************************************************/

define('TARGET_BLOCK','~<div class="negotiators-wrapper">(.*?)</div>(\r\n)</div>~s');

define('NAME', '~<div class="negotiators-name"><a href="/negotiator/(.*?)">(.*?)</a></div>~');

define('EMAIL', '~<div class="negotiators-email">(.*?)</div>~');

define('PHONE', '~<div class="negotiators-phone">(.*?)</div>~');

define('LASTPAGE', '~<li class="pager-last last"><a href="/negotiators\?page=(.*?)"~');

define('IMAGE', '~<div class="negotiators-photo"><a href="/negotiator/(.*?)"><img src="/(.*?)"~');

define('PARSE_CONTENT', TRUE);

define('IMAGE_DIR', 'c:\\xampp\\htdocs\\scraper\\image\\');

// Interface MySQLTable

interface MySQLTable {

public function addData($info);

}

// Class EmailDatabase

// Use the code below to create the table

/*****************************************************

CREATE TABLE IF NOT EXISTS `contact_info` (

`id` int(12) NOT NULL AUTO_INCREMENT,

`name` varchar(128) NOT NULL,

`email` varchar(128) NOT NULL,

`phone` varchar(128) NOT NULL,

`image` varchar(128) NOT NULL,

PRIMARY KEY (`id`),

UNIQUE KEY `email` (`email`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

*******************************************************/

class EmailDatabase extends mysqli implements MySQLTable {

private $_table = 'contact_info'; // set default table

// Connect to database

public function __construct() {

$host = 'localhost';

$user = 'root';

$pass = '';

$dbname = 'email_collection';

parent::__construct($host, $user, $pass, $dbname);

}

// Use this function to change to another table

public function setTableName($name) {

$this->_table = $name;

}

// Write data to table

public function addData($info) {

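// Escape each value so quotes in scraped text cannot break or inject into the query

$name = $this->real_escape_string((string) $info['name']);

$email = $this->real_escape_string((string) $info['email']);

$phone = $this->real_escape_string((string) $info['phone']);

$image = $this->real_escape_string((string) $info['image']);

$sql = 'INSERT IGNORE INTO ' . $this->_table . ' (name, email, phone, image) ';

$sql .= "VALUES ('$name', '$email', '$phone', '$image')";

return $this->query($sql);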

}

// Execute MySQL query here

public function query($query, $mode = MYSQLI_STORE_RESULT) {

$this->ping();

$res = parent::query($query, $mode);

return $res;

}

}

// Interface HttpScraper

interface HttpScraper

{

public function parse($body, $head);

}

// Class Scraper

class Scraper implements HttpScraper {

private $_table;

// Store MySQL table if want to write to database.

public function __construct($t = null) {

$this->setTable($t);

}

// Delete table info at destructor

public function __destruct() {

if ($this->_table !== null) {

$this->_table = null;

}

}

// Set table info to private variable $_table

public function setTable($t) {

if ($t === null || $t instanceof MySQLTable)

$this->_table = $t;

}

// Get table info

public function getTable() {

return $this->_table;

}

// Parse function

public function parse($body, $head) {

if ($head == 200) {

$p = preg_match_all(TARGET_BLOCK, $body, $blocks);

if ($p) {

foreach($blocks[0] as $block) {

$agent = array();

$agent['name'] = $this->matchPattern(NAME, $block, 2);

$agent['email'] = $this->matchPattern(EMAIL, $block, 1);

$agent['phone'] = $this->matchPattern(PHONE, $block, 1);

$originalImagePath = $this->matchPattern(IMAGE, $block, 2);

$agent['image'] = $this->saveImage($originalImagePath, IMAGETYPE_GIF);

// Only write to the database when a table object was supplied

if ($this->_table !== null) {

$this->_table->addData($agent);

}

}

}

}

}

// Return matched info

public function matchPattern($pattern, $content, $pos) {

if (preg_match($pattern, $content, $match)) {

return $match[$pos];

}

}

public function saveImage($imageUrl, $imageType = IMAGETYPE_GIF) {

if (!file_exists(IMAGE_DIR)) {

mkdir(IMAGE_DIR, 0777, true);

}

if( $imageType === IMAGETYPE_JPEG ) {

$fileExt = 'jpg';

} elseif ( $imageType === IMAGETYPE_GIF ) {

$fileExt = 'gif';

} elseif ( $imageType === IMAGETYPE_PNG ) {

$fileExt = 'png';

}

$newImageName = md5($imageUrl). '.' . $fileExt;

$image = new Image();

$image->load($imageUrl);

$image->resizeToWidth(100);

$image->save( IMAGE_DIR . $newImageName, $imageType );

return $newImageName;

}

}

?>

4. extract.php

<?php

include 'image.php';

include 'scraper.php';

include 'httpcurl.php'; // include lib file

$target = "http://<domain name>/negotiators?page="; // Set our target's URL; do not include the page number in the pagination URL

$startPage = $target . "1"; // Set first page

$scrapeContent = new Scraper;

$firstPage = new HttpCurl();

$firstPage->get($startPage); // get first page content

$lastPage = 0; // stays 0 (nothing is crawled) if the first page cannot be fetched

if ($firstPage->getStatus() === 200) {

$lastPage = $scrapeContent->matchPattern(LASTPAGE, $firstPage->getBody(), 1); // get total page info from first page

}

$db = new EmailDatabase(); // can be excluded if do not want to write to database

$scrapeContent = new Scraper($db); // can be excluded as well

$pages = new HttpCurl($scrapeContent);

// Loop from the first page to the last and parse every page into the database

for($i=1; $i <= $lastPage; $i++) {

$targetPage = $target . $i;

$pages->get($targetPage, PARSE_CONTENT);

sleep(rand(15, 45)); // delay 15 to 45 seconds to prevent detection by system admin

}

?>